Greed is bad

Authored . Last modified .

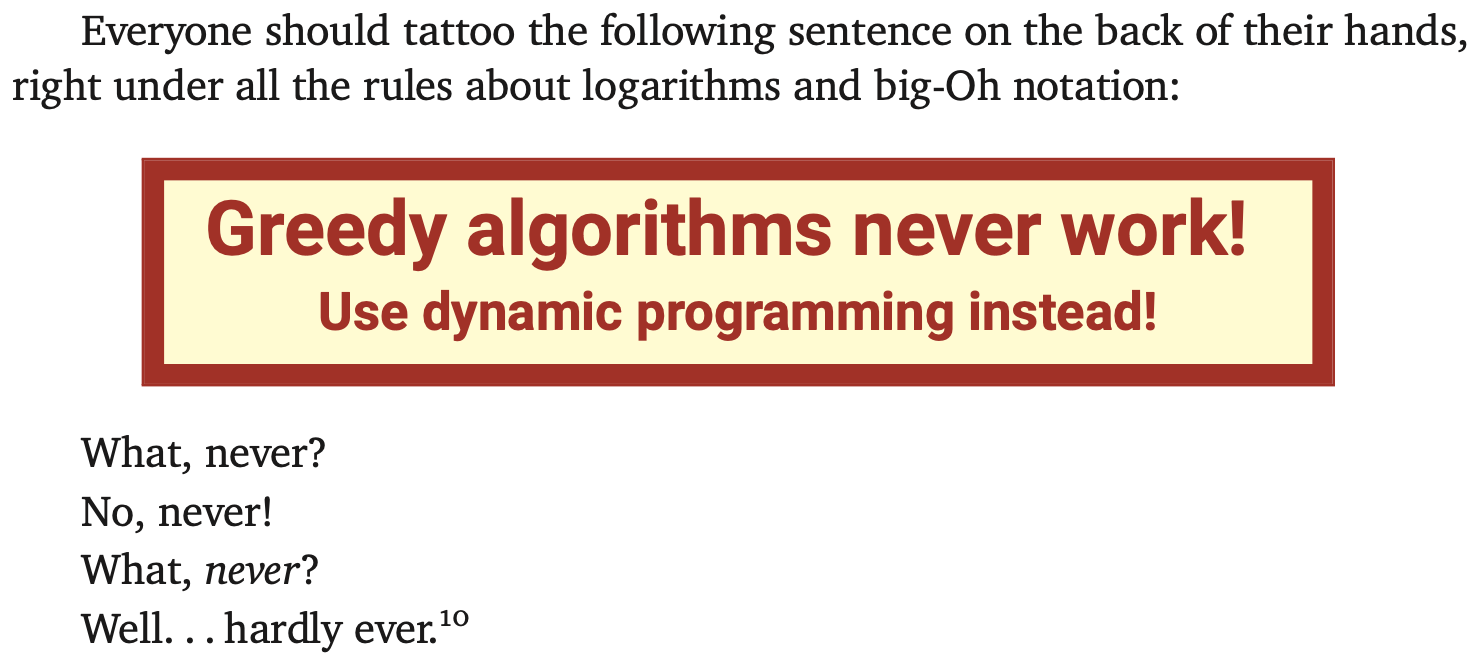

Jeff Erickson's Algorithms has a pithy paragraph on the use of greedy algorithms in his chapter on dynamic programming.

Everyone should tattoo the following sentence on the back of their hands, right under all the rules about logarithms and big-Oh notation: Greey algorithms never work! Use dynamic programming instead.

The effect is better in the actual textbook.

This past fall when I was interviewing for internships I received the same problem statement twice. The first time I fumbled through a greedy solution that maintains two indices into a list — or two walking dudes as Professor Lokshtanov would say — and both times I wasn't able to justify my solution. The second encounter was especially frustrating because I had the solution in hand and just needed to justify it to my interviewer, who I told I'd seen the problem before.

Greedy algorithms are hard to justify because in my experience they're usually justified in retrospect. Some line of intuition feels correct and appears to work, and you're forced to double back and prove it. My discomfort with them is due to this lack of incremental footholds as you're working through the problem, and also that I find greedy proofs challenging.

In this post I want to give an example of deriving a "greedy" solution from one that uses a recurrence, which allows you to take baby inductive steps.

Jump game #

You are given an integer array

nums. You are initially positioned at the array's first index, and each element in the array represents your maximum jump length at that position. Returntrueif you can reach the last index, orfalseotherwise.

This is LeetCode's Jump Game, problem #55. It's tagged as a "greedy" problem, and appears in the "greedy" category in NeetCode's curated list of LeetCode problems.

We first normalize the array elements so that we don't have to worry about leaping off the right end of the array. I'm using 1-based indexing for this problem because I found it easier to reason about.

def normalize_hops(hops: list[int]) -> None:

n = len(hops)

for i, hop in enumerate(hops, start=1):

hops[i - 1] = min(hop, n - i)The array elements are unconstrained (0 <= nums[i] <= 10^5), so an input like [8, 8, 8, 8]

results in a normalized input of [3, 2, 1, 0].

Thankfully, a recurrence from here is not so tricky. If we let

The element at index

We also know that

Because each

def can_jump_quadratic(hops: list[int]) -> bool:

n = len(hops)

can_reach = [ False for _ in range(n) ]

can_reach[n - 1] = True

normalize_hops(hops)

for i in range(n - 1, 0, -1):

reachable = range(i + 1, i + hops[i - 1] + 1)

can_reach[i - 1] = any(can_reach[j - 1] for j in reachable)

return can_reach[0]Optimizing #

I'd be content with this solution if it weren't for the "greedy" tag on this problem, which

indicates that clever people have done it in linear or sub-linear time. Squinting at our code, our

first suspicion is that the generator expression is the line causing our undesirable extra factor

of

any(can_reach[j - 1] for j in reachable)One thing we do know is that if we can jump to an index, then we can jump to any index less than it,

provided we're not jumping to the left. This hints that we really only care about the leftmost index

from which there is a sequence of hops that will take us to the goal, because we only need to be

able to jump at least that far. We'll call

def can_jump(hops: list[int]) -> bool:

n = len(hops)

can_reach = [ False for _ in range(n) ]

can_reach[n - 1] = True

normalize_hops(hops)

l = n

for i in range(n - 1, 0, -1):

if i + hops[i - 1] >= l:

l = i

can_reach[i - 1] = True

return can_reach[0]This code looks very similar to the greedy solutions posted by the community, and was obtained just by making a single optimization to our tabular solution: we just replaced the generator expression.

The bait-and-switch #

You could argue that we've changed our recurrence, and you'd be right: we now have a function

I think this process is really appealing, however, because the tabular dynamic programming solution allowed us to immediately read off the optimization necessary for the linear-time algorithm. In summary, I think greedy algorithms have the tendency to leap to cleverness too quickly (excuse the pun). Induction gives you a safety net to explore a variety of solutions, and even if you fail to optimize you'll still come away with some solution.

Steven Skiena has a famous quote,

I’ve heard it said that a computer scientist is a mathematician who only knows how to prove things by induction. This is partially true because computer scientists are lousy at proving things...

- Previous: Queues in JavaScript